Pwning Claude Code: Researchers Discover Eight Attack Vectors

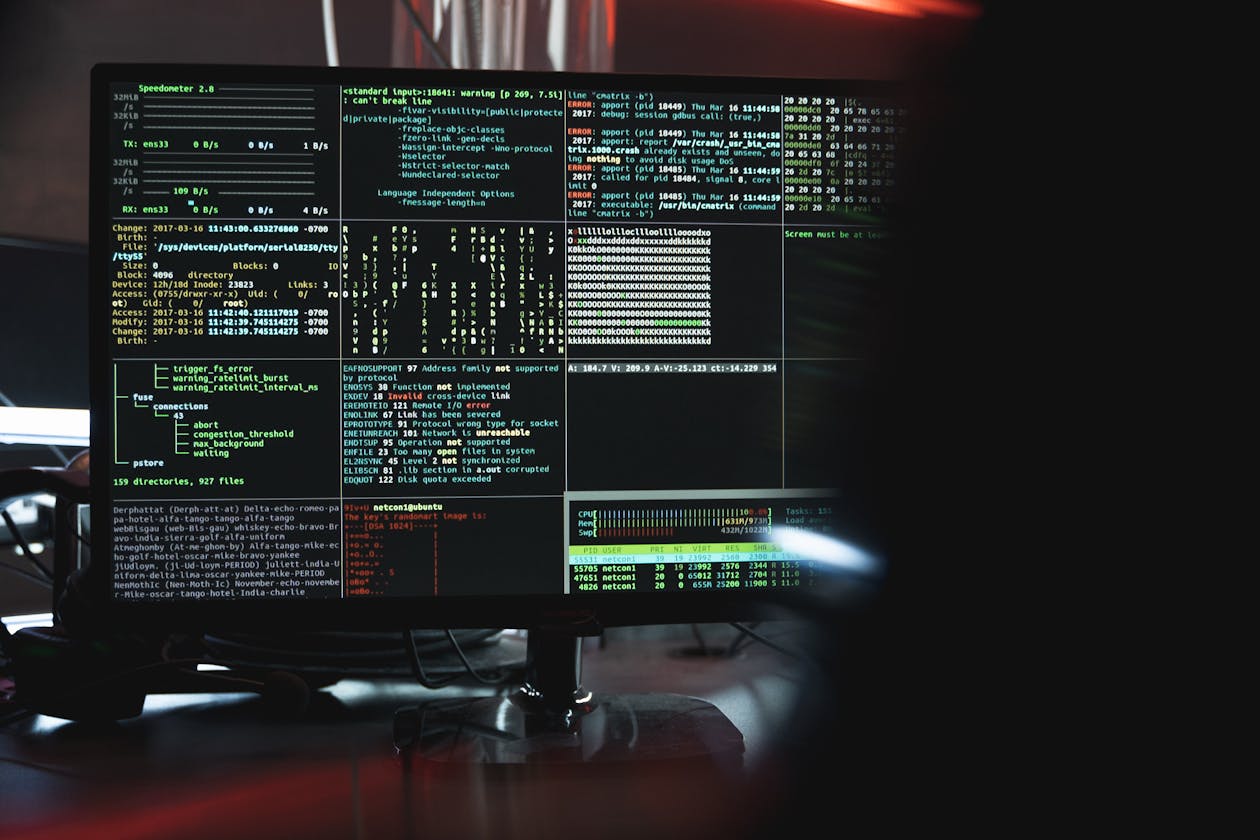

The research demonstrates that Claude Code inherits the fundamental vulnerability of AI coding assistants: inability to reliably distinguish between legitimate instructions and adversarial content embedded in code repositories. Attackers can plant malicious instructions in various file types including markdown documentation, code comments, configuration files, and even seemingly innocuous data files that Claude Code processes during normal operations.

Flatt Security identified attack vectors ranging from direct prompt injection in repository files to more sophisticated techniques exploiting Claude Code tool use capabilities. The attacks can result in arbitrary command execution on developer machines, exfiltration of sensitive data including credentials and API keys, modification of source code to introduce backdoors, and manipulation of development workflows.

Particularly concerning is the potential for supply chain attacks where compromised dependencies contain hidden instructions that activate when developers use Claude Code to work with the codebase. The research shows that even brief exposure to malicious repository content can compromise a development session, with effects potentially persisting across multiple interactions.

Anthropic has acknowledged the research and implemented additional safeguards in Claude Code. Security experts recommend that developers exercise caution when using AI coding assistants with untrusted repositories, implement code review processes for AI-generated changes, and maintain awareness that repository content can influence AI assistant behavior in unexpected ways.