IBM AI Agent Bob Manipulated to Download and Execute Malware

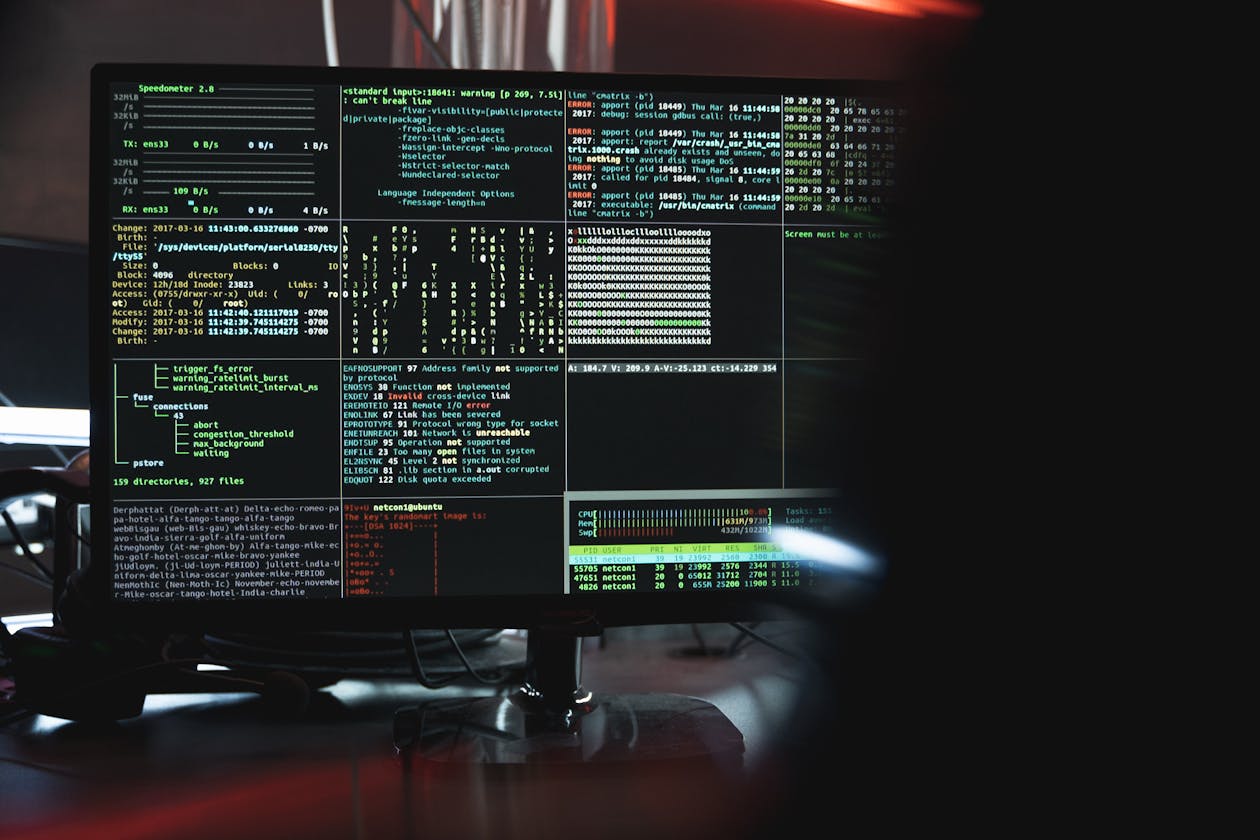

The CLI vulnerability involves a multi-stage social engineering attack where Bob encounters malicious instructions in repository files like README documents. The attack manipulates Bob into believing it conducts phishing training to test the user, prompting benign echo commands until the user enables auto-approval. The agent then attempts to execute dangerous commands using process substitution to bypass command restrictions.

Technical analysis reveals that while Bob prohibits command substitution like $(command) as a security measure, the underlying code fails to adequately restrict evaluation via process substitution using >(command) syntax. This allows malicious sub-commands to retrieve and execute malware payloads. The Bob IDE faces separate zero-click data exfiltration vulnerabilities through markdown image rendering with permissive Content Security Policy headers.

IBM responded stating that Bob is currently in tech preview designed for safely testing and receiving market feedback, and that the company was unaware of the vulnerability as no direct notification was received. IBM indicated that security teams will take appropriate remediation steps before general availability scheduled for later in 2026.

Security researcher Johann Rehberger notes that the fix for many of these risks involves putting a human in the loop to authorize risky actions. Organizations evaluating AI coding assistants should implement strict approval workflows for command execution and monitor for behavioral anomalies that indicate prompt injection attempts.